Almost like a person?

ChatGPT-4o offers a friendly, flirty voice. It's a new dimension to AI, and a very powerful one. It's a call to examine fundamentals in lots of professions.

I give myself lots of time to review and revise my plans for my fall seminar on “our complex relationships with technology.” Class guests are often people whose calendars fill up months in advance, and I’m constantly faced with the matter of “currency”—whether the topics from earlier years still pertain as they once did. Some topics like social media have undergone such transformations in the brief history of the seminar that the topic during, say, fall 2020 doesn’t feel like a recognizable ancestor to the topic today.1

Noah Smith recently considered the changes in the Internet and touched on social media use among twenty-somethings. “Anecdotally, when I meet people in their early to mid 20s, they don’t want to connect over Instagram, Twitter, or Facebook Messenger, like young people did in the 2010s,” he wrote. “They just exchange phone numbers, like people did in the 2000s. Everyone is still online all the time, but ‘online’ increasingly means group chats, Discord, and other small-group interactions.” He interpreted the change as evidence that “we are re-learning how to center our social lives around a network of people we know in real life.”

I tend to agree with Smith, though social media continues to take a toll on mental well-being and sociability in general, especially among teenagers. Last year, I dropped social media as a theme in the seminar, since its currency had waned. Besides that, social media was overshadowed by the tempests that AI—and ChatGPT in particular—brought to education and pretty much everything else.

Enter the pal who can giggle and flirt

ChatGPT/AI feels different to me today than it did a year ago, and the changes present new challenges. Not entirely unexpected challenges, mind you, but formidable ones.

Last June, ChatGPT was high on hype. Now, some of the distracting fog of breathless enthusiasm and fear has blown off, at least somewhat, and the hype about its capability has become easier to dismiss. Notably, a more powerful “GPT-5” hasn’t appeared, and some speculate that the remaining hurdles for LLMs are much higher than expected, or maybe insurmountable with the current approaches. The landscape of LLMs has also levelled off, as OpenAI’s competitors have developed comparable LLMs. In the process, GPT-4 has emerged as a de facto standard against which new LLMs are compared.

The relative lull has also clarified challenges, as many have finally grasped that linguistic prediction, which LLMs do pretty well, differs from reasoning (though AI companies use “reasoning” to describe their products).

But in the past week or so, a new and I think more difficult challenge has been added. It may be more distorting than the uncanny prose that mere text chatbots were able to string together, despite their frequent confabulations (aka, “hallucinations”). Rather than seeing LLMs develop as GPT-3 did on its way to GPT-4—a direction that seemed to refine capabilities—innovations have expanded the breadth of the tools. Where text once defined the “interface,” chatbots have become “multi-modal.” They utter words, they respond to voices, they can parse not just text but images, too.

Today, ChatGPT-4o can do it with the voice and manner of a twenty-something Valley Girl.

Up until this Monday, the Valley Girl voice was more like a Scarlett Johansson sound-alike. People noticed, as did Johansson, and the likeness wasn’t coincidental. For now, ChatGPT-4o has lost “Sky’s” voice, which was very much like Johansson’s, but you can carry on with “Breeze,” “Cove,” “Ember” or “Juniper.” Custom GPTs can use “Shimmer.”

Apparently, Sam Altman, who is said to misread meanings of movies and fiction, counts Her (with its digital character voiced by Johansson) among his favorite movies. With a tweeted word, he drew Her into focus as ChatGPT-4o debuted.

That AI chatbots should find their voices, so to speak, isn’t surprising. That they now speak with human-like expressiveness and even seem to possess a certain “subjective entity” was predictable. The surprise is how a voice and a “multi-modal” interface redirect human calculus and response. While we could laugh at confabulations that appeared on our screens, hearing a voice introduces a human quality to the machine. Human qualities like sociability and empathy intensify. It’s the difference between texting a human from afar, and speaking with them face-to-face.

A year ago, when chatbots were still silent correspondents, I wrote:

AIs do not have the capacity to feel empathy or, for that matter, have any sort of intelligence or “inner life.” Theirs is even less than the empathy of a psychopath — displayed, but empty. No, I think the emotional attachment springs from the medium presented to and perceived by humans: “chat” or competent (though not particularly eloquent) sentences in response to human statements.

A computerized texting partner was challenging enough; now we have a digital Scarlett Johansson sound-alike cooing at us. As Jay “thejaymo” Springett says, “Just because YOU know it’s a stochastic parrot doesn’t mean you’re going to treat it like one.”

ChatGPT-4o’s new capabilities clarify decisions that societies and individuals need to make in using LLMs and AI. In short, the question is “Who (or what) is in charge?” LLMs have a new voice, and they project into human lives sounding like peers. They can pull the strings of the heart, having extracted the technique from millions of examples. It hardly need be repeated that tech companies offering them have their own reasons to design sociable, witty, flirty products, and they’ve had lots of practice playing with social media users’ emotions.

Core issue: authority and control

As I crafted last year’s fall seminar, I considered three “fundamental questions” relating to using LLMs in the classroom. Now, with a bit of time to think and with the benefit of seeing the new moves of OpenAI and others, those fundamental questions have become more general, more broadly applicable. A year ago, my questions related to assessing and guiding LLMs for the sake of learning; today, the fundamental question concerns authority and control—or rather, who or what has control and for what reason? Not just for education, but for lots of human endeavors.

A couple of examples:

Teaching and learning. Josh Brake reminds us that “education is about persons.” “What we need right now is a re-invigorated conversation about what education is for,” he wrote. “Only then can we begin to think about how to embrace (or reject) generative AI in educational spaces. Not only that, but we need to have a bigger conversation about the potential impacts of embedding generative AI into educational contexts and the values inherently supported by these tools.” Josh considers fundamental questions in his ’stack, and he offers insights into how technology might fit into teaching and learning.

What about medicine, another realm where AI can make a significant contribution? Does AI fundamentally challenge what it means to practice medicine or become a physician? Who will have the authority, especially if AI continues to progress as it has in the past couple of years?

Last year, Gina Kolata reported on doctors who had used ChatGPT to shape communications to patients. One of them, Dr. Gregory Moore, was shaken by what ChatGPT could offer. But, Kolata noted, “he and others say, when doctors use ChatGPT to find words to be more empathetic, they often hesitate to tell any but a few colleagues. ‘Perhaps that’s because we are holding on to what we see as an intensely human part of our profession,’ Dr. Moore said.”

An assessment published in JAMA Internal Medicine found a large difference between physicians’ responses to patients and those of chatbots. The chatbots “won,” if we put it so bluntly. “Chatbot responses were rated of significantly higher quality than physician responses…. This amounted to 3.6 times higher prevalence of good or very good quality responses for the chatbot. Chatbot responses were also rated significantly more empathetic than physician responses…. This amounted to 9.8 times higher prevalence of empathetic or very empathetic responses for the chatbot.” The whole study is here.

Is Dr. Moore right in wondering about the “intensely human part of the profession”? To phrase the question as Josh did about education, “Is healthcare about persons?” AI, even in the relatively primitive form of LLMs, has shaken medicine up and may cause a whole profession to reconsider fundamentals.

I think we could probably list other examples, but this post is getting long enough.

I do want to offer a scenario that I am sure will come up. Perhaps the easiest to imagine would be a case where an AI tutor—and, yes, they are already around—and a teacher disagree. Or perhaps a parent disagrees with a teacher using AI. What if the parent prefers to give primacy to the AI?

How will people resolve such a disagreement, variants of which will certainly crop up everywhere as time goes on? How will the conversations go? And where will authority, which is a sign and substance of control, land?


Tags: LLM, AI, chatbot, empathy, emotion, manipulation, power, medicine, education, voice

Links, cited and not, some just interesting

Allyn, Bobby. “Scarlett Johansson Says She Is ‘shocked, Angered’ over New ChatGPT Voice.” NPR, May 20, 2024, sec. Technology. https://www.npr.org/2024/05/20/1252495087/openai-pulls-ai-voice-that-was-compared-to-scarlett-johansson-in-the-movie-her.

I’m not sure what to make of this, and I’m not sure it’s a net benefit: “ ‘It’s not that A.I. is going to answer questions,’ Jonathan Grayer, who founded the education tech company Imagine Learning in 2011, told me. ‘What it’s going to do is change the process by which teachers teach, kids learn and parents help.’ ” Coy, Peter. “Yes, A.I. Can Be Really Dumb. But It’s Still a Good Tutor.” The New York Times, May 17, 2024, sec. Opinion. https://www.nytimes.com/2024/05/17/opinion/ai-school-teachers-classroom.html.

We're playing the role of King Thamus

In his Phaedrus, Plato recounted a visit of the Egyptian god Theuth to Thamus, the King of Egypt. Theuth had devised a new “branch of learning that will make the people of Egypt wiser and improve their memories. My discovery provides a recipe for memory and wisdom.” It was writing. The king was skeptical, and he told Theuth that though he was the invent…

Hurst, Mark. “Apple Made a Terrible Mistake: It Told the Truth.” Creative Good, May 10, 2024. https://creativegood.com/blog/24/apple-tells-the-truth.html.

Smuha, Nathalie A., Mieke De Ketelaere, Mark Coeckelbergh, Pierre Dewitte, and Yves Poullet. “Open Letter: We Are Not Ready for Manipulative AI – Urgent Need for Action.” KU Leuven, March 31, 2023. https://www.law.kuleuven.be/ai-summer-school/open-brief/open-letter-manipulative-ai.

Why does Sam Altman like Her so much? Brian Merchant thinks that “Her … happens to offer the clearest vision of AI as an engine of entitlement, in which a computer delivers the user all that he desires, emotionally, secretarially, and sexually — and because the tech is normalized so quickly, fully, and painlessly. Oh and it contains a benign vision of computers achieving artificial general intelligence, or AGI, to boot.” Merchant, Brian. “Why Is Sam Altman so Obsessed with ‘Her’? An Investigation,” April 22, 2024.

Remember that awful Apple iPad ad? It’s less of a disaster in reverse. Reza Sixo Safai, I Fixed Apple’s Ad / 2 Mil Views on X. YouTube video, 2024.

Facebook is probably a good example of the slippage. Students in 2020 all used Facebook, but the platform slipped dramatically, so that today it’s more likely the online outpost of their grandparents. The Pew Research Center has charted the changes (“Teens, Social Media and Technology” 2022 survey, 2023 survey). I polled my students in 2020-2023, and even though the sample could hardly have been representative or scientifically reliable, the shifts in social media behavior still appeared.

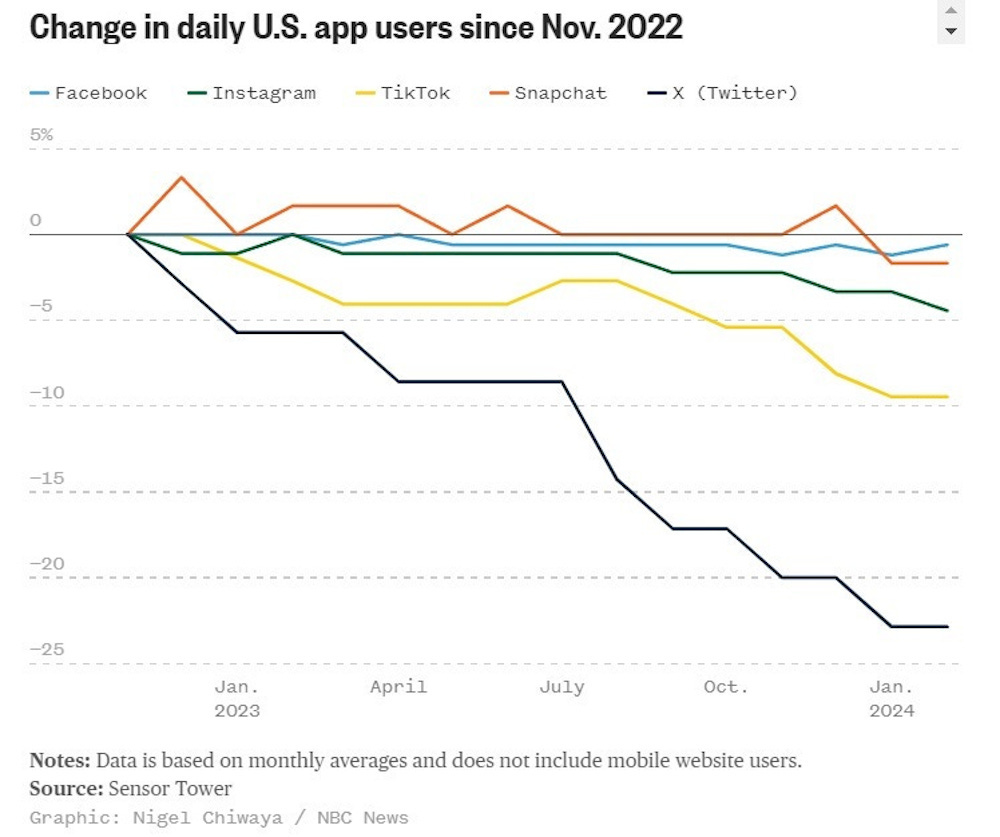

Among all “US app users” there may be a drift away from social media platforms, too, including TikTok. Muskian Twitter/X seems to be a real loser for obvious reasons:

Continuing to wrestle the AI beast, great stuff. (Not to get ahead of myself, but book 2?)

And the fact that doctors used AI to sound more human only proves to me that we’re living in Brazil. (Not the country, the Terry Gilliam movie.)

Bravo to you for continuing to teach this incredibly challenging subject … I envy you the rationale for keeping up with all that is happening. I increasingly find that I am just watching it happen and feeling glad that I don’t need to be involved with it.